Answer these 5 questions before submitting data requests | Census

Junaid is a research engineer at IBM Research where he builds and deploys machine learning models to learn interesting things about data. He has a background in product-oriented data science focused on startups. Junaid has a passion for helping non-data people learn more about their data to drive better decisions.

In this article, you'll learn some of the questions data owners (product managers and C-suite leaders alike) should ask themselves about their data before submitting discovery requests to their data team, and how to answer them. These include:

- What do I want from my data?

- Does my data report the right things?

- Are there obvious sources of bias in my data?

- Can I enrich my data with outside sources?

- What seems like it could be related?

Like all data scientists, I’m often approached by product managers and C-level staff who have a data set they’d like analyzed for business intelligence. As we talk about their project, a familiar question from the data owner inevitably comes up: “We have all this business data. Can you do something with this?”

While the answer is (generally) yes, I’ve realized that many data stakeholders and business users don’t realize what it is they’re asking for: Data discovery.

Unfortunately, if the data owner doesn’t know what they want out of their data, data scientists like myself can only be so effective in our data discovery work (no matter how good our data discovery tools may be). This isn’t a criticism (trust me, we love our business counterparts), but instead, an opportunity to close an all-too-common information gap.

This gap doesn’t just cost time and internal budget as your data scientists spend unnecessary hours homing in on the purpose of the dataset. It can cost you even more 💰 if your company has to bring in an external consultant as well.

By now, you may be asking yourself how you can be a better-informed owner of your data so you can get the most out of your data scientists when you go to them with a request (thank you, in advance, from all of us fulfilling these asks).

Don’t worry - it doesn’t involve getting super technical. There is a set of basic facts and features you can learn about your data to help your data team out (which means more money saved and a faster turnaround time on your data requests).

In this post, I’ll outline a few key questions non-technical data owners can ask themselves before putting their request into the data team to make their collaboration more productive. If you work through these questions before submitting future requests for data discovery, you’ll better use your data analytics and data preparation resources so you spend less time going back and forth with data teams and more time making informed business decisions to positively impact your business.

Without further ado, here are five questions to ask yourself before submitting that ask to your data team.

1. What do I want from data discovery?

The question of “what do I want” may seem like an obvious one, but it’s easy to skip over on the way to your request.

Before you come to your data team with a dataset or data source for analysis, ask yourself: “What does a successful analysis outcome look like?” True, you may not know exactly what specific expectations you have of your data (that’s part of the reason for data discovery, after all), but if you can give some generalized ballpark details it’ll help your data scientist narrow in on a better outcome.

This first step helps you to identify what your data is about (e.g. do you have transactional data or profile data). Here are some starter questions:

- Is your data indexed by time?

- If so, is it daily, weekly or monthly?

- Are there any notable gaps in your data?

These are all helpful basic questions to understand as you formulate your discovery ask, and the answers to them will help inform the objectives of your data so you can easily set up the discovery task for your data team.
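If you (or a technically minded colleague) want to check for gaps before filing the request, it can be done in a few lines. Here is a minimal sketch, assuming the data is supposed to be daily and that you can export its dates as a list. The `find_gaps` helper and the sample dates are hypothetical and only illustrate the idea.

```python
from datetime import date, timedelta


def find_gaps(dates, expected_step=timedelta(days=1)):
    """Return (start, end) pairs where consecutive records are
    further apart than the expected step."""
    ordered = sorted(set(dates))
    return [
        (earlier, later)
        for earlier, later in zip(ordered, ordered[1:])
        if later - earlier > expected_step
    ]


# Hypothetical record dates: daily data with 3 and 4 March missing.
record_dates = [
    date(2023, 3, 1),
    date(2023, 3, 2),
    date(2023, 3, 5),
    date(2023, 3, 6),
]

gaps = find_gaps(record_dates)
for start, end in gaps:
    print(f"No records between {start} and {end}")
```

For weekly data you would pass `expected_step=timedelta(days=7)` instead. Monthly data needs a slightly different check because months differ in length.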

Let’s take a look at an example. Say you work for a supermarket and you have refunds and returns data for the past six months for a particular store. Your dataset has information about the products customers have returned, the time of day they were returned, their monetary value, the product category they fall into (🔋, 👚, 🥫), and the brand of the returned product.

Before you hand this data over to your analytics team for deeper data analysis, you can identify some of the key learnings you’re interested in about your product line. Here are some sample questions you might consider:

- Would you like to know returns as a proportion of sales broken down by month?

- Are some product lines returned more frequently than others (electronics vs clothing)?

- Are there some brands that customers return more often than others?

- What are the most common reasons for returning goods?
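To make the first few questions concrete, here is a rough sketch of how they translate into simple counts. The records, the brand names, and the `units_sold` figures are all made up for illustration, and a real analysis would pull them from your returns and sales systems.

```python
from collections import Counter

# Hypothetical returns, one tuple per returned item: (month, category, brand).
returns = [
    ("2023-01", "electronics", "BrandA"),
    ("2023-01", "clothing", "BrandB"),
    ("2023-02", "electronics", "BrandA"),
    ("2023-02", "electronics", "BrandC"),
    ("2023-02", "canned goods", "BrandB"),
]

# Hypothetical units sold per month, needed to turn counts into a rate.
units_sold = {"2023-01": 40, "2023-02": 50}

# Returns as a proportion of sales, broken down by month.
returns_per_month = Counter(month for month, _, _ in returns)
return_rate = {
    month: returns_per_month[month] / sold for month, sold in units_sold.items()
}

# Which product lines and brands come back most often?
returns_per_category = Counter(category for _, category, _ in returns)
returns_per_brand = Counter(brand for _, _, brand in returns)

for month, rate in sorted(return_rate.items()):
    print(f"{month}: {rate:.1%} of units sold were returned")
print("Most returned category:", returns_per_category.most_common(1))
```

Notice that the monthly rate can't be computed from the returns data alone. That is exactly the kind of thing worth spotting before you hand the dataset over.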

When you take the time to understand the answers to this “what do I want” question, you can provide your data scientists with valuable framing for their discovery process (and make sure you get the answers you really care about at the end).

In our above example, you may want to reduce the average number of returns across all key product lines by 10%. In order to achieve this goal, we will have to identify the sources and causes of returns with our second question.

2. Does my data report the right things?

Hard truth time: Just because you have a lot of data, doesn’t mean there’s something useful in that data set.

I know, you spent a bunch of time pulling the data together and you need something to show your latest campaign or strategy is working. But it’s important to make sure your decision-making is based on your data, not seeking data findings to justify your business decisions (vanity metrics don’t do anyone any good).

Instead, work to uncover the metrics that really indicate whether or not your efforts are successful. That way you, and your data team, can work from an empirically accurate truth that will really, truly drive growth.

To ensure you’re getting at the data and metrics that you should measure, think about the key indicators you track and examine how many of them drive the behavior you think is important.

Let’s think back to our example. Here are some of the questions we might ask ourselves in order to ensure we’re getting at the right metrics to analyze data about our refunds:

- Do you have refund data on all product lines?

- If not, are there other product lines whose refund information would be useful?

- Is it reasonable to consider the product lines you do have data for to be problematic areas or is the real insight in data you wish you had?

- Have you recorded useful reasons for refunds?

- Having reasons such as product damage or product exchange is more helpful for managers than simply knowing that the “customer wasn’t satisfied”.
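One quick way to gauge whether your recorded reasons are useful is to measure how many of them are too vague to act on. The sketch below assumes the reasons are stored as free text. The `VAGUE_REASONS` set and the sample records are hypothetical, and you would adapt them to whatever your own system records.

```python
# Hypothetical set of reasons that are too vague to act on.
VAGUE_REASONS = {"customer wasn't satisfied", "other", ""}


def vague_share(reasons):
    """Fraction of refund records whose reason is too vague to act on."""
    normalized = [reason.strip().lower() for reason in reasons]
    if not normalized:
        return 0.0
    vague = sum(1 for reason in normalized if reason in VAGUE_REASONS)
    return vague / len(normalized)


# Hypothetical refund reasons as they might appear in an export.
reasons = [
    "Product damage",
    "Customer wasn't satisfied",
    "Product exchange",
    "",
    "Other",
]

share = vague_share(reasons)
print(f"{share:.0%} of refund reasons are too vague to act on")
```

If that share is high, the dataset may not be able to answer the question you care about, no matter how much analysis is thrown at it.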

Extending these kinds of questions to your own use case will ensure the data you’re collecting and handing over to your data team can be mined for actual gold, not just fool’s gold.

3. Are there obvious sources of bias in my data?

I’ll be honest: Figuring out if there’s an obvious source of bias in data is a hard question for even data specialists to answer, but it’s really worth considering and identifying before handing data over for data analysis.

Common sources of bias include regional bias, product type bias, gender/ethnic bias, and recency bias. However, just because your data has a bias doesn’t mean you need to scrap it. As a good steward of your data, you should know where the biases lie so you can avoid making inferences and statements about your findings that may be unfairly drawn.

Returning to the refunds data example, let’s take a look at some of the biases we might encounter:

- Regional bias: If you only have data for a particular store, take that into account and don’t generalize to the entire region or country, because different people in different regions behave (you guessed it) differently.

- Demographic bias: If your data is about people, ask whether it represents the meaningful demographics properly and fairly across customer segments. (Note: Fair doesn’t mean identically sized segments, but that each segment’s share of your sample should reflect its share of the population.)
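The note on demographic bias can be checked with simple arithmetic: compare each segment's share of your sample with its share of the population you care about. Here is a minimal sketch. The age segments, the counts, and the population shares are invented for illustration.

```python
def representation_gaps(sample_counts, population_shares):
    """For each segment, return its sample share minus its population share.

    Positive values mean the segment is over-represented in the sample,
    negative values mean it is under-represented.
    """
    total = sum(sample_counts.values())
    return {
        segment: sample_counts.get(segment, 0) / total - share
        for segment, share in population_shares.items()
    }


# Hypothetical survey respondents per age segment.
sample_counts = {"18-34": 60, "35-54": 30, "55+": 10}

# Hypothetical share of each segment among all customers.
population_shares = {"18-34": 0.30, "35-54": 0.40, "55+": 0.30}

segment_gaps = representation_gaps(sample_counts, population_shares)
for segment, gap in segment_gaps.items():
    print(f"{segment}: {gap:+.0%} relative to the population")
```

In this made-up case the youngest segment makes up 60% of the sample but only 30% of customers, so any conclusion drawn from the sample leans heavily on that one group.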

We can uncover biases by asking questions like:

- Where does my data come from?

- Is it collected from surveys where people opt-in?

- What about the people who don’t opt in, or who don’t stop outside the store to answer questions?

- Can we really say that those customers we’ve heard from reflect the feelings of those who didn’t answer?

- When was data collected? (E.g. does the time of year play a role in how many people and what kinds of people responded?)

Here’s a funny example that happened to me: I was once approached in a panic by a product manager telling me a key metric was much lower than in preceding months and that we should look into it quickly and act. Once I dug in, I discovered the manager was only looking at the previous four months of data. When I extended the historical window to 24 months, I found that the metric exhibited a seasonal fluctuation that resembled what we were seeing at the time (TL;DR the panic was completely unjustified and things were actually OK, to the relief of my product manager).
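The difference between the two views is easy to reproduce. The sketch below uses invented numbers (not the real metric from that story) with a dip every December, and compares the latest month against the preceding four months and against the same month a year earlier.

```python
from statistics import mean


def pct_change(new, old):
    """Percentage change from old to new."""
    return (new - old) / old * 100


# Hypothetical 24 months of a metric, oldest first, with a dip every December.
history = [100] * 11 + [70] + [105] * 11 + [74]

latest = history[-1]

# Short window: compare with the average of the four preceding months.
vs_recent = pct_change(latest, mean(history[-5:-1]))

# Long window: compare with the same month one year earlier.
vs_last_year = pct_change(latest, history[-13])

print(f"Versus the previous four months: {vs_recent:+.1f}%")
print(f"Versus the same month last year: {vs_last_year:+.1f}%")
```

With only four months of context the latest value looks like a steep drop. Against the same month a year earlier it is actually an improvement.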

Examining your dataset for bias can prevent unnecessary panic like the case above. It also keeps you from designing campaigns and strategies around unfounded or unfair assumptions, which tend to be ineffective and waste time and resources.

4. Can I enrich our data discovery process with outside sources?

Much like your work, data doesn’t exist in a silo. It’s most useful when integrated as part of an ecosystem. This is why most companies have data warehouses where large datasets are tied together to tell a comprehensive story of what is going on within the full context of their business. When you’re looking at a particular dataset, it’s important to keep in mind what similar or related data you have available that can augment your data to provide some extra context for the findings from it.

These questions require some creativity because they’ll be unique to your business, your use case, and your data. But here are some questions to get you started:

- Do we have data that complements our present dataset?

- Can we augment individual data items?

- Could you provide multiple years’ worth of data for reference?

In our example scenario, we might see that our product sales data could provide additional context to our refunds data, or that we could add in data about brands and hardware metrics to get a better understanding of what kinds of item features folks return more than others.
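Mechanically, this kind of enrichment is usually a join on a shared key. Here is a minimal sketch, assuming both datasets carry a product identifier. The `product_id` field, the `enrich` helper, and all of the values are hypothetical.

```python
# Hypothetical refund records.
refunds = [
    {"product_id": "P1", "amount": 20.0},
    {"product_id": "P2", "amount": 5.0},
    {"product_id": "P9", "amount": 12.0},  # no matching sales record
]

# Hypothetical product sales data, keyed by the same product identifier.
sales = {
    "P1": {"brand": "BrandA", "units_sold": 200},
    "P2": {"brand": "BrandB", "units_sold": 50},
}


def enrich(refund_rows, sales_by_product):
    """Attach brand and units sold to each refund, leaving None where
    the sales data has no matching product."""
    enriched_rows = []
    for row in refund_rows:
        extra = sales_by_product.get(row["product_id"], {})
        enriched_rows.append(
            {
                **row,
                "brand": extra.get("brand"),
                "units_sold": extra.get("units_sold"),
            }
        )
    return enriched_rows


enriched = enrich(refunds, sales)
unmatched = [row["product_id"] for row in enriched if row["brand"] is None]
print("Refunds with no matching sales record:", unmatched)
```

The unmatched rows are worth flagging to your data team up front, since they usually point to a gap in one of the two sources.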

The answers to these questions are useful to any data scientist as they will let your data experts extend the actionable insights they can pull from your data to inform future areas of data discovery.

5. What seems like it could be related?

Here’s some inside baseball for you ⚾: One of the first questions we data scientists ask ourselves is how data could be related within a dataset.

Ultimately, all modeling and inferential statistics are concerned with identifying and quantifying relationships between variables. Sometimes, these relationships are obvious, and rudimentary data mining can bring these to light. Other times, we’ll need more advanced machine learning methods to flesh them out. Either way, my objective (and the objective of your data scientists) is to understand the network of relationships latent within data. Hopefully, we can bring into focus those relationships that can be leveraged for our own purposes.

So how do you, as a data owner, help with this process? Well, there is a myriad of possible relationships that can exist within a large multidimensional dataset (and only some are useful at the end of the day). This is why it’s important for you to look at your data and think about what type of relationships between variables could be useful or help you do your job better.

What this allows you to do is to generate hypotheses you’re interested in about your data, which can give the data scientist a starting point for what they should validate first. Without some starting guidance, this first step can eat up a significant amount of time in the discovery process.

Here are a couple of example questions you can think about to drill down further:

- Based on our prior experience, what might impact our variable of interest most?

- Do we know of some redundant data that can be discarded to help our data experts better process and analyze the data set?

For our store refunds example, we might see that time of year is a good indicator of refund volume, which tells our data scientists to look at seasonal trends. We might also find that some of our data is redundant (like the raw price of a product and the refunded price) and that we can get rid of some of it without impacting the outcome.
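One common way to spot this kind of redundancy is to check how strongly two columns move together. The sketch below computes a Pearson correlation by hand. The column names and values are made up, and a correlation near 1 is only a hint that one column may add little beyond the other, not proof that it can be dropped.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)


# Hypothetical columns from the refunds dataset.
list_price = [10.0, 25.0, 40.0, 15.0]
refunded_price = [10.0, 25.0, 40.0, 15.0]  # same values as list_price
hour_of_day = [9, 14, 11, 18]

price_vs_refund = pearson(list_price, refunded_price)
price_vs_hour = pearson(list_price, hour_of_day)

print(f"list price vs refunded price: {price_vs_refund:.2f}")
print(f"list price vs hour of day:    {price_vs_hour:.2f}")
```

Here the refunded price tracks the list price exactly, so keeping both columns adds nothing, while the hour of day carries separate information.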

Answering these questions and bringing the answers alongside your data set will open a conversation between you and the data scientist. You’ll be better able to hypothesize about your data and generate follow-up theories that could be investigated later on.

Build a better relationship with your data (and your data team)

At the end of the day, better data helps you better listen to your customers and interpret what they’re telling you through their interactions with your product and campaigns. To get at high-quality data to fuel this conversation with customers, you need to invest in having productive conversations with your data team (it’ll make everyone happier, trust me).

Data discovery isn’t a hands-off, “see what you can do” process between a data owner and a data scientist, but rather an opportunity to collaborate and come up with data insights that make your life easier, your strategies more efficient, and your customers happier. By answering these five questions before you take your dataset to your data team for analysis, you’ll set everyone up for better and faster business processes.

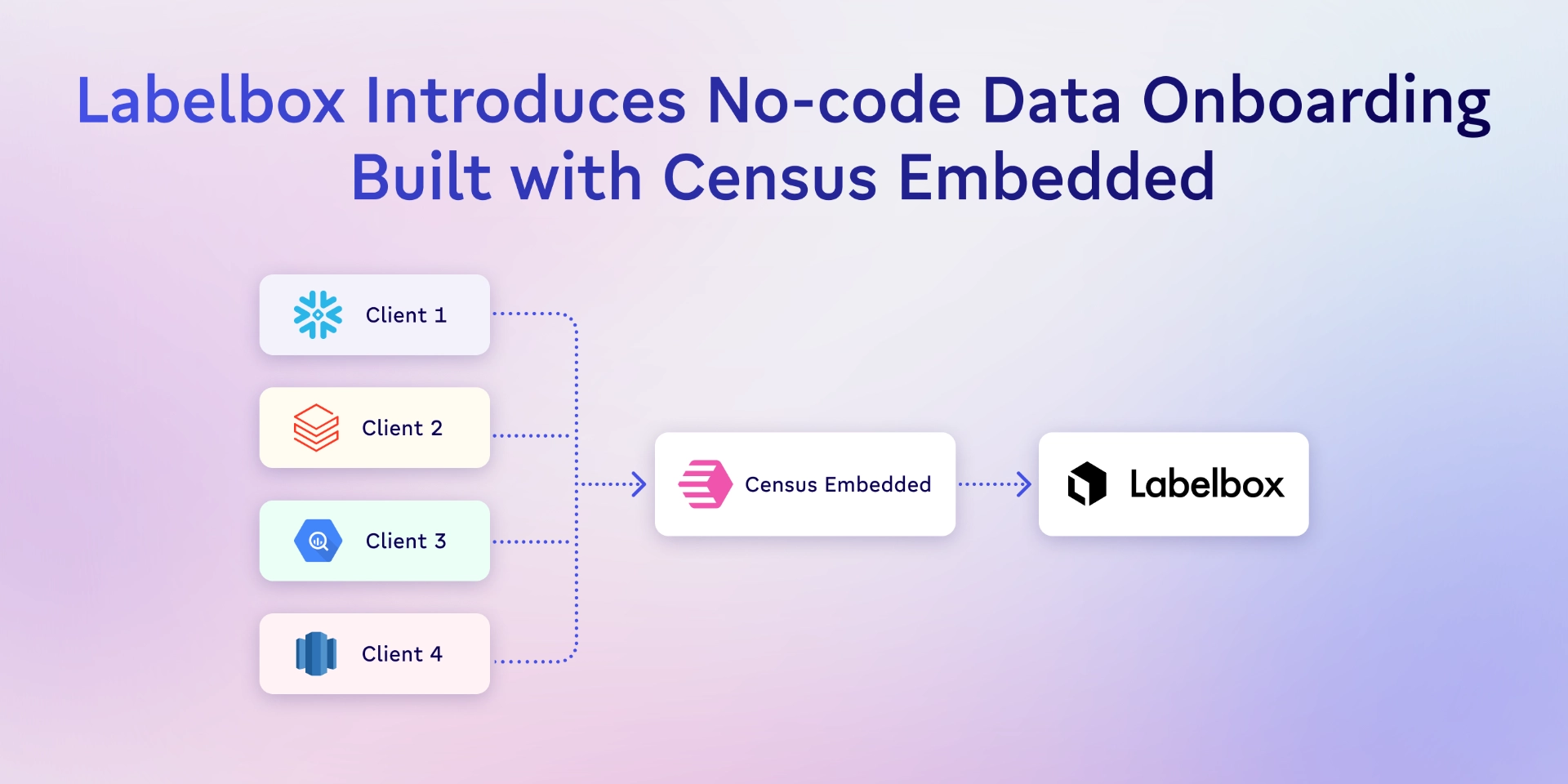

And if you really want to make your data team happy, you should consider a tool like Census. 😍 Census lets you and your data team seamlessly send data everywhere from A to B, without engineering favors or countless custom solutions, and empowers user-friendly, self-service business team use cases. This means they’ll have more time for exciting modeling and advanced analytics projects and you’ll have real-time access to better data. Win-win. 💪