
CDP or Data Warehouse? The best CDP is already in your DW | Census

Syl is the Head of Growth & Operations at Census. He's a revenue leader and mentor with a decade of experience building go-to-market strategies for developer tools. He's based in San Francisco, California, United States.

There’s a customer data platform (CDP) lurking in your data warehouse. With the right lightweight (and even free) tools, you can coax that CDP out of your data warehouse without needing to invest in an expensive CDP solution.

The core purpose of a CDP is to help businesses collect and use customer data. Put plainly, a CDP is a database for customer data. You generally have a choice of buying a CDP “off the shelf” from the likes of mParticle, BlueConic, and Treasure Data, or building your own solution out of your existing data infrastructure.

Choosing between these two options is tough for many businesses. But the truth is, with a good data infrastructure, you might already have a CDP or the capability to build one to suit your specific needs. To understand why, we need to discuss the role of CDPs, and the CDP industry as a whole.

CDPs are good

So, what the hell is a CDP, and why would you want one? Well, a CDP is essentially a database for customer data with a few bells and whistles. Within your tech stack, CDPs generally sit between your CRM and your other marketing automation tools.

There are three things CDPs help with that make them decent investments:

- Data collection: CDPs collect customer data (usually first-party data) across many touch points.

- Data management: CDPs serve as a central place for customer data to pass through, which allows them to clean and standardize it all.

- Data governance: Data privacy regulations like the GDPR and the CCPA are huge, hairy issues we all have to deal with. CDPs help companies manage their first-party data in a compliant way.
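To make the "clean and standardize" step concrete, here is a minimal Python sketch of the kind of normalization a CDP (or a warehouse transformation) applies so that records can be compared. The field names (`email`, `country`) and the alias table are hypothetical, chosen only for illustration.

```python
# Hypothetical mapping of free-text country values to ISO 3166-1 alpha-2 codes.
COUNTRY_ALIASES = {
    "us": "US",
    "usa": "US",
    "united states": "US",
    "uk": "GB",
    "united kingdom": "GB",
}


def standardize_record(record: dict) -> dict:
    """Return a cleaned copy of a raw customer record.

    - Emails are trimmed and lowercased, so " Ann@Example.com " matches "ann@example.com".
    - Country values are mapped to two-letter codes when a known alias is found;
      unknown values are kept (trimmed) rather than dropped.
    """
    cleaned = dict(record)
    email = record.get("email")
    if email:
        cleaned["email"] = email.strip().lower()
    country = record.get("country")
    if country:
        key = country.strip().lower()
        cleaned["country"] = COUNTRY_ALIASES.get(key, country.strip())
    return cleaned


raw = [
    {"email": " Ann@Example.com ", "country": "USA"},
    {"email": "ann@example.com", "country": "united states"},
]
cleaned = [standardize_record(r) for r in raw]
print(cleaned[0] == cleaned[1])  # True: both raw rows now describe the same customer
```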

If you really want to dive deeper (don't do it), you can go to the CDP Institute and get lost in pages and pages of the CDPI's attempts to nail down what, exactly, a CDP is.

... But off-the-shelf CDPs aren’t great

In the past five years or so, there’s been a whole slew of platforms that looked at these pain points, then looked at their technology, and went full Scrooge McDuck.

But this gold rush isn’t great for buyers when a young, complex industry gets inundated with a bunch of money-grubbing anthropomorphic ducks (metaphorically speaking, of course).

When investing in an off-the-shelf CDP solution, you need to be aware of three main issues caused by the constantly shifting CDP market:

1. They’re ill-defined

The CDP industry is around seven years old. Tools like AgilOne (now Acquia CDP) and Tealium have been around for longer, but they weren’t what we would think of as CDPs today. The industry didn’t coalesce around the modern definition of “CDP” until 2013.

That means the first Avengers movie (2012) is older than the CDP category itself.

Because the industry is so young, it’s populated by a spectrum of vaguely related tools that generally help businesses collect and use customer data. And with the recent acquisition of Segment by Twilio (for $3.2 billion!), we might see a lot of companies try to weasel their way into the industry.

Here’s how Michael Katz, mParticle’s founder and CEO, puts it:

These days, buying a CDP means buying a set of vaguely related customer data tools. When you buy one of these platforms, it can be hard to know exactly what you’re getting. Initiatives like the CDP Institute help, but the industry is still far from mature.

2. When they try to define themselves, they get it wrong

All this ambiguity makes it hard to determine which off-the-shelf platform is a “true CDP.” A lot of this confusion comes from their role in data infrastructure.

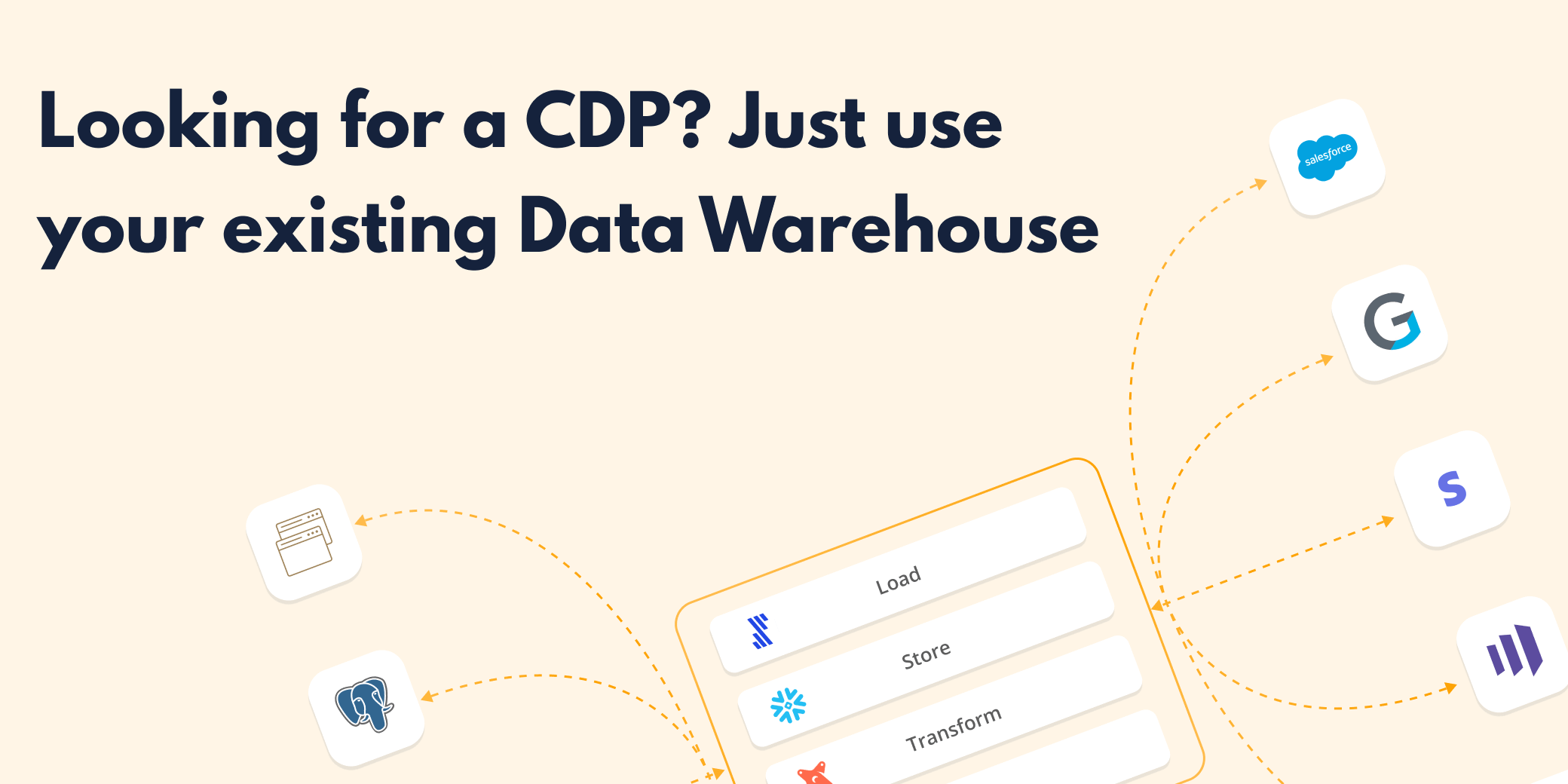

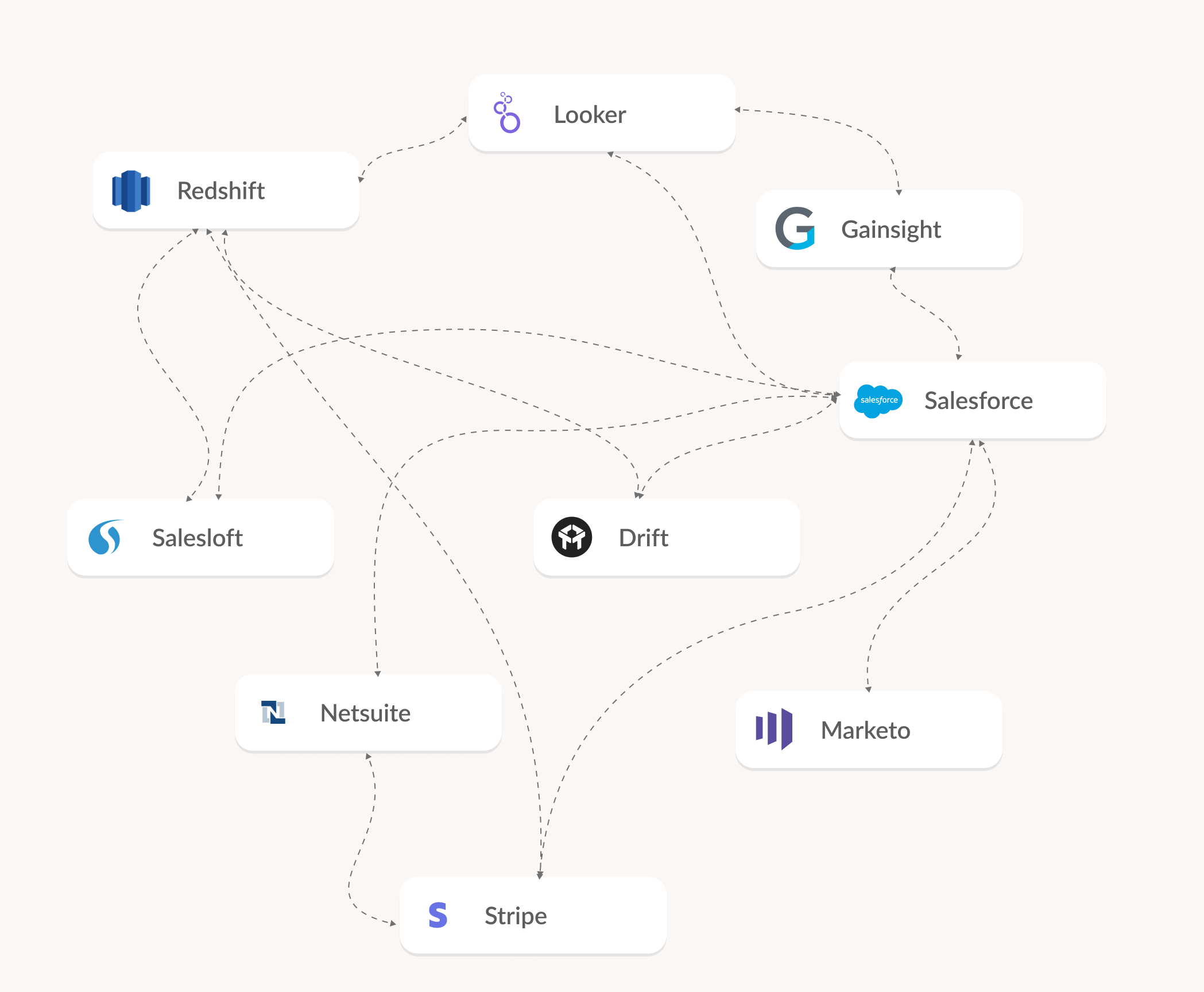

A good data infrastructure is centered on a hub. From this hub extend various spokes that send data into the hub or pull data out. The hub should always be a data warehouse or a data lake (as the bright minds at Andreessen Horowitz have argued).
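A quick back-of-the-envelope count shows why the hub matters. If each of n tools exchanges data directly with every other tool, you can end up maintaining up to n(n-1)/2 point-to-point pipelines; with a hub, each tool needs only its one spoke to the warehouse. The sketch below just does that arithmetic. Real stacks rarely wire every pair together, so treat the point-to-point figure as an upper bound.

```python
def point_to_point_pipelines(n_tools: int) -> int:
    """Upper bound on pipelines when every pair of tools is connected directly."""
    return n_tools * (n_tools - 1) // 2


def hub_and_spoke_pipelines(n_tools: int) -> int:
    """One spoke per tool, each connecting to the central warehouse (or lake)."""
    return n_tools


for n in (5, 10, 20):
    print(n, point_to_point_pipelines(n), hub_and_spoke_pipelines(n))
# 5 10 5
# 10 45 10
# 20 190 20
```

The gap grows quadratically, which is one way to read the "web" that forms when there is no central hub.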

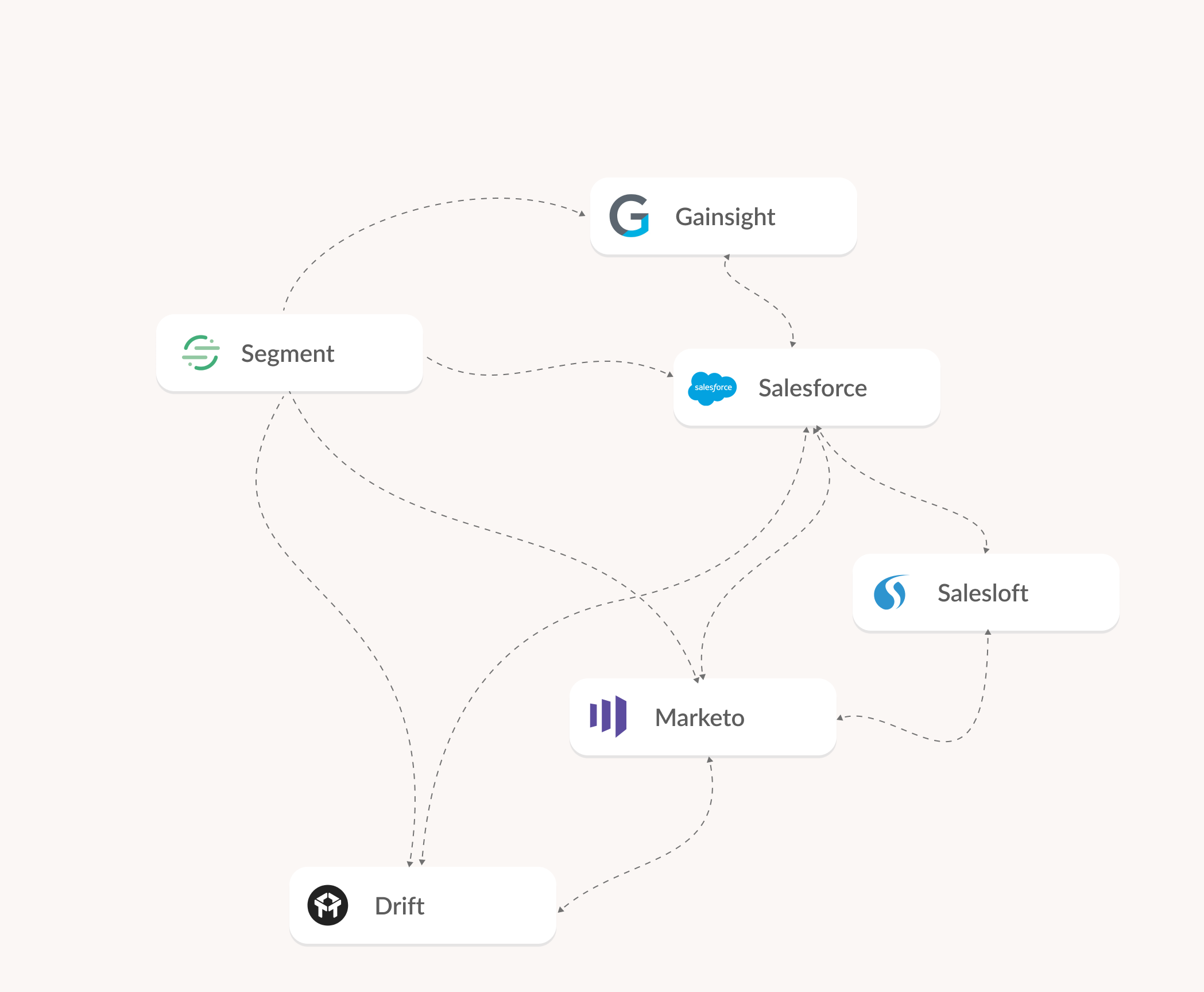

Without this central hub, data infrastructures often turn out closer to this web:

But if you listened only to the marketing copy of some off-the-shelf CDP solutions, you might be led to think otherwise. Often, you’ll find terms like “single source of truth” thrown around.

For instance, mParticle says they have “the ability to unify records into a single source of truth.” And Segment says it can help you “create a single source of truth.”

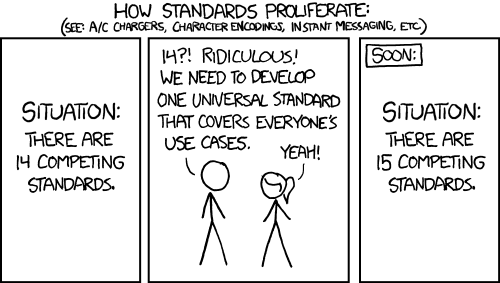

But a new single source of truth falls into the “new standard” fallacy, as immortalized by this XKCD comic:

Applied to a data infrastructure that isn’t built around a central hub, a CDP essentially becomes a larger node in the web above:

A good example of why a CDP is a larger node and not a hub/single source of truth is the world of business intelligence (BI). BI platforms pull data from data warehouses using SQL.

They will never pull data from a CDP, because it cannot host all the data a BI platform might need access to. And the data it does host is not organized in a way that’s easily accessible.
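To illustrate, here is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in for a warehouse such as Snowflake or BigQuery. The `customers` and `invoices` tables are hypothetical. The query is the sort a BI tool might run: it joins customer data with billing data, the kind of data that typically lives in the warehouse rather than in a CDP.

```python
import sqlite3

# In-memory SQLite database standing in for a cloud data warehouse.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, email TEXT, plan TEXT);
    CREATE TABLE invoices (invoice_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);

    INSERT INTO customers VALUES (1, 'ann@example.com', 'pro'), (2, 'bo@example.com', 'free');
    INSERT INTO invoices VALUES (10, 1, 49.0), (11, 1, 49.0), (12, 2, 0.0);
    """
)

# A typical BI-style query: revenue per plan, joining customer and billing tables.
rows = conn.execute(
    """
    SELECT c.plan, SUM(i.amount) AS revenue
    FROM customers AS c
    JOIN invoices AS i ON i.customer_id = c.customer_id
    GROUP BY c.plan
    ORDER BY c.plan
    """
).fetchall()
print(rows)  # [('free', 0.0), ('pro', 98.0)]
```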

CDPs aren’t a hub or a “single source of truth.” And they never will be, no matter what their marketing copy says.

3. They can lock you in

Off-the-shelf CDPs can be expensive. And when you buy into an off-the-shelf CDP, you’re also buying into their philosophy on handling data.

Buying into another tool’s data philosophy can have massive ramifications not only for how you collect and manage data now, but for the foreseeable future. And what if the CDP you’ve invested all this time and money into getting up and running gets bought?

It’s a trade-off. Buying a pre-packaged CDP solution means you’ll have to give away some of your control over how you collect data. If that’s something you can stomach, then go for it. If not, you’ll need to look elsewhere.

How Your Data Warehouse Can Become Your CDP

If you have a good data infrastructure (i.e., hub-and-spoke) and a decent data team, your data warehouse probably already has many of the features an off-the-shelf CDP would provide. Here’s a high-level overview:

Data collection

With a good data infrastructure, all your data will end up in your data warehouse anyway.

- Use an ETL tool like Fivetran to load third-party data into your warehouse.

- Segment is very good at event tracking and collecting first-party data; just don’t rely on it as your hub. Otherwise, you can look into Snowplow (it’s open source and caters to data teams) or Freshpaint (if auto-tracking is your thing).
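Whichever collection tool you pick, the result is similar: event records that land in a warehouse table. The sketch below shows one plausible shape for such a record and appends it to an `events` table, again with `sqlite3` standing in for the warehouse. The `track` helper and the column names (`anonymous_id`, `user_id`, `event`, `properties`, `ts`) are illustrative assumptions, not the exact schema Segment, Snowplow, or Freshpaint writes.

```python
import json
import sqlite3
from datetime import datetime, timezone

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE events (anonymous_id TEXT, user_id TEXT, event TEXT, properties TEXT, ts TEXT)"
)


def track(anonymous_id, user_id, event, properties):
    """Hypothetical helper: append one first-party event, storing properties as JSON."""
    conn.execute(
        "INSERT INTO events VALUES (?, ?, ?, ?, ?)",
        (
            anonymous_id,
            user_id,
            event,
            json.dumps(properties),
            datetime.now(timezone.utc).isoformat(),
        ),
    )


# A visitor browses anonymously, then signs up and becomes a known user.
track("anon-123", None, "Page Viewed", {"path": "/pricing"})
track("anon-123", "user-42", "Signed Up", {"plan": "free"})

for row in conn.execute("SELECT anonymous_id, user_id, event FROM events ORDER BY rowid"):
    print(row)
```

Keeping both the anonymous and the known identifier on the sign-up event is what later lets the warehouse stitch the two together.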

Data management

Your warehouse then stores all this data to your specifications. The important distinction here is that you shouldn’t think of your CRM or end-point marketing tools as data platforms. These tools are endpoints and should only generate or consume data, not transform it.

You should manage and transform your data in your data warehouse. Use it to keep central definitions and technical logic in one place, away from your endpoints. This will make it easier to do tasks like identity resolution and attribution with a little help from a few lightweight tools:
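Identity resolution is a good example of logic that belongs in the warehouse. One common approach (not necessarily the one any particular vendor uses) treats every identifier (an anonymous ID, an email, a user ID) as a node, links identifiers that appear together in the same record, and takes each connected group as one customer. The Python sketch below does this with a small union-find; in practice this logic often lives in SQL models instead, and the record format here is hypothetical.

```python
from collections import defaultdict


class UnionFind:
    """Disjoint-set structure with path compression."""

    def __init__(self):
        self.parent = {}

    def find(self, x):
        self.parent.setdefault(x, x)
        root = x
        while self.parent[root] != root:
            root = self.parent[root]
        # Path compression: point every visited node directly at the root.
        while self.parent[x] != root:
            next_x = self.parent[x]
            self.parent[x] = root
            x = next_x
        return root

    def union(self, a, b):
        self.parent[self.find(a)] = self.find(b)


def resolve_identities(records):
    """Group identifiers that co-occur in any record into one customer cluster.

    Each record maps identifier type -> value (None when unknown). Identifiers are
    kept as (type, value) pairs so an email and a user ID with the same text never
    collide. Returns one set of identifiers per resolved customer.
    """
    uf = UnionFind()
    for record in records:
        ids = [(k, v) for k, v in record.items() if v is not None]
        for ident in ids:
            uf.find(ident)  # register identifiers that appear alone, too
        for a, b in zip(ids, ids[1:]):
            uf.union(a, b)
    clusters = defaultdict(set)
    for ident in list(uf.parent):
        clusters[uf.find(ident)].add(ident)
    return list(clusters.values())


records = [
    {"anonymous_id": "anon-123", "email": None, "user_id": None},
    {"anonymous_id": "anon-123", "email": "ann@example.com", "user_id": None},
    {"anonymous_id": None, "email": "ann@example.com", "user_id": "user-42"},
    {"anonymous_id": "anon-999", "email": None, "user_id": None},
]
customers = resolve_identities(records)
print(len(customers))  # 2: Ann's three identifiers merge; anon-999 stays separate
```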

- Use Snowflake or BigQuery for a data warehouse.

- Use dbt to perform transformations and make your customer data usable.

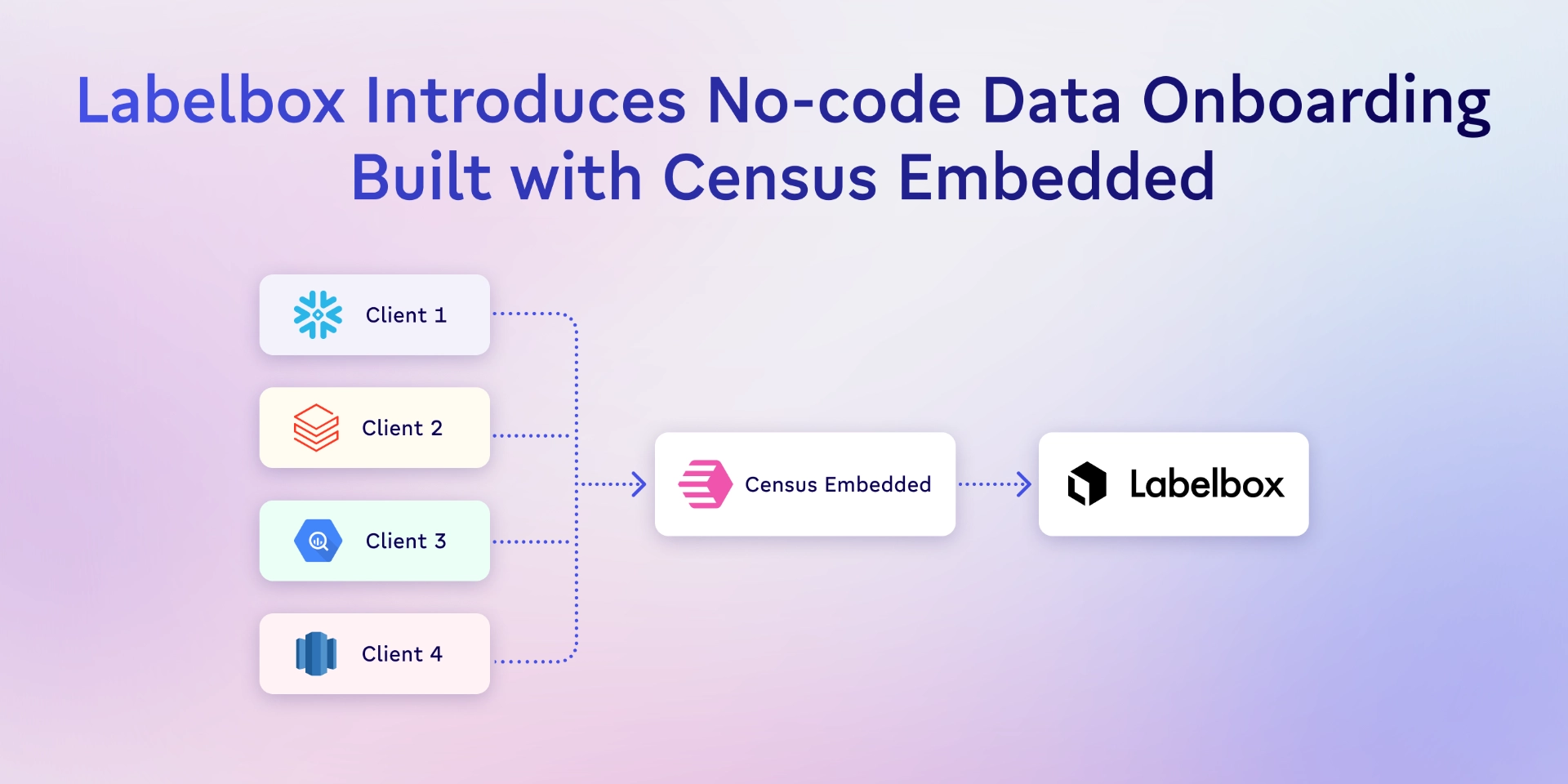

- Use Census to send that data exactly where it needs to go.
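The transform-then-sync pattern behind these tools can be sketched in a few lines. Below, a SQL query plays the role of a dbt model that builds one row per customer, and a hypothetical `sync_changes` function plays the role of a reverse ETL step by picking out only the rows that are new or changed since the last sync. This illustrates the idea only; it is not how dbt or Census are implemented, and `sqlite3` again stands in for the warehouse.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE events (user_id TEXT, event TEXT);
    INSERT INTO events VALUES
        ('user-42', 'Signed Up'), ('user-42', 'Invited Teammate'),
        ('user-7', 'Signed Up');
    """
)

# Stand-in for a dbt model: one row per customer with a derived attribute.
CUSTOMER_MODEL = """
    SELECT user_id, COUNT(*) AS event_count
    FROM events
    GROUP BY user_id
"""


def build_model():
    """Materialize the model as a dict keyed by user_id."""
    return {user_id: {"event_count": n} for user_id, n in conn.execute(CUSTOMER_MODEL)}


def sync_changes(current, last_synced):
    """Hypothetical reverse-ETL step: return only rows that are new or changed.

    A real sync would then upsert these rows into a destination such as a CRM.
    """
    return {key: row for key, row in current.items() if last_synced.get(key) != row}


first = build_model()
print(sync_changes(first, {}))  # every customer is new, so every row is returned

conn.execute("INSERT INTO events VALUES ('user-7', 'Invited Teammate')")
second = build_model()
print(sync_changes(second, first))  # {'user-7': {'event_count': 2}}
```

Diffing against the last synced state keeps each sync small. This sketch ignores rows that disappear from the model; a fuller version would also decide how to handle deletions in the destination.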

Data governance

A good hub-and-spoke data infrastructure provides more direct control over all your data so you can ensure compliance. Leveraging an off-the-shelf CDP means, to some extent, relying on them to get data governance right.
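Having the data in one warehouse makes requests such as a GDPR or CCPA deletion easier to reason about: you can find and remove a person's rows in one place, then let the change flow to downstream tools. The sketch below assumes a hypothetical registry of tables that each carry an `email` column and uses `sqlite3` as a stand-in warehouse. It covers only the warehouse side of such a request.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE profiles (email TEXT, name TEXT);
    CREATE TABLE orders (email TEXT, amount REAL);
    INSERT INTO profiles VALUES ('ann@example.com', 'Ann'), ('bo@example.com', 'Bo');
    INSERT INTO orders VALUES ('ann@example.com', 49.0), ('bo@example.com', 9.0);
    """
)

# Hypothetical registry of tables holding personal data keyed by email.
# Table names come from this fixed tuple, never from user input.
PII_TABLES = ("profiles", "orders")


def erase_person(email):
    """Delete every row tied to `email` across the registered tables.

    Returns the number of rows removed per table, which is handy for an audit log.
    """
    removed = {}
    with conn:  # commit all deletes together, or roll back if any fails
        for table in PII_TABLES:
            cur = conn.execute(f"DELETE FROM {table} WHERE email = ?", (email,))
            removed[table] = cur.rowcount
    return removed


print(erase_person("ann@example.com"))  # {'profiles': 1, 'orders': 1}
```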

Figma was able to use a solid data infrastructure to build a CDP solution for identity resolution. They collected all they knew about their customer in their data warehouse. Then, they enhanced that data with Clearbit, filling in any knowledge gaps with third-party data. With all that data in their warehouse, they then used Census to send it directly into Salesforce.

Now, whenever the Figma sales team pulls up information on a prospect, they’ll receive an overview of every interaction that prospect has had with Figma.
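The enrichment step in the Figma example, filling gaps in first-party data with third-party data, comes down to a merge rule. One simple rule, assumed here for illustration (the source doesn't say which rule Figma used), is that first-party values always win and third-party values only fill fields that are missing. The field names are hypothetical, and no Clearbit or Salesforce API is called.

```python
def enrich(first_party: dict, third_party: dict) -> dict:
    """Merge two profiles, keeping first-party values and filling only the gaps.

    A field counts as a gap when it is absent, None, or an empty string.
    """
    merged = dict(first_party)
    for field, value in third_party.items():
        if merged.get(field) in (None, ""):
            merged[field] = value
    return merged


profile = {"email": "ann@example.com", "company": "Acme", "title": None}
enrichment = {"company": "Acme Corp", "title": "Designer", "employees": 250}
print(enrich(profile, enrichment))
# {'email': 'ann@example.com', 'company': 'Acme', 'title': 'Designer', 'employees': 250}
```

Letting first-party data win means a vendor's guess never overwrites something your own customers told you.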

The process of turning your data warehouse into a CDP is far more nuanced than what we’ve laid out here. We’re just giving an overview of what’s possible and how. We’re down to chat if you want to go deeper.

Your Own Unique CDP Solution

This approach of turning your existing data infrastructure into a CDP solution might be exactly what your business needs. It’ll address all the pain points off-the-shelf CDPs address, without any of their drawbacks. You’ll be able to:

- Tailor your CDP solution to your exact needs. With tools like dbt and Census, you can make your data warehouse work exactly the way you need it to.

- Create a more sound data infrastructure. CDPs only manage customer data. With a warehouse-centered infrastructure for all of your data, data governance across your entire tech stack is much easier. You’ll also have a solid foundation to scale using a hub-and-spoke method.

- Be more flexible. Relying on lightweight and relatively cheap tools (both dbt and Census have free plans) means the up-front cost is negligible, especially compared to off-the-shelf CDPs. And you’ll be glad you have this flexible stack instead of having to talk to a CDP customer support rep or salesperson who may tell you, “Our tool can’t do that, but that’s because you’re doing it wrong.”

If you’d like to learn more about how Census can help you build your own CDP solution out of your existing data infrastructure, schedule a demo, and we’ll talk you through it.